During my time as a Product Management Intern at Welfie, a key process in our workflow was rating the effectiveness of medical resources. However, rating was slow: each resource took around 5 minutes, and with over 300 resources to rate, something needed to change. While it would’ve been nice to start coding right away, I had to do some learning of my own first.

Coding Basics - What is an API?

An API (Application Programming Interface) is a bridge that allows different software applications or services to communicate and work with each other.

Analogy: Ordering from a restaurant.

A good way to think of an API is as a restaurant menu. When we want food, we don’t walk into the kitchen and talk to the chef; instead, we order from the menu, and the chef prepares what we asked for. You (the developer or user) are the customer who wants food (a specific task or function performed), and the menu (the API) lists the dishes available. You don’t need to know how the chef (the code behind the API) prepares each dish; you just need to know which dishes are available and how to order them.

For example, services like ChatGPT let you “order” a task with a prompt, and the code behind the service carries it out without you ever touching that code. This lets you skip writing a lot of complex code while still being able to run heavy tasks.
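To make the analogy concrete, here is a minimal Python sketch. The Restaurant class and its menu are hypothetical, made up purely for illustration: the public order() method plays the role of the menu (the API), while the underscore-prefixed _cook() method stands in for the kitchen details that callers never need to see.

```python
class Restaurant:
    """Hypothetical example: order() is the 'menu' (API); _cook() is the hidden kitchen."""

    MENU = ("pasta", "salad", "soup")

    def order(self, dish: str) -> str:
        # Only dishes listed on the menu can be ordered; anything else is rejected.
        if dish not in self.MENU:
            raise ValueError(f"{dish!r} is not on the menu")
        return self._cook(dish)

    def _cook(self, dish: str) -> str:
        # Internal implementation detail: customers never call this directly.
        return f"a plate of {dish}"


restaurant = Restaurant()
print(restaurant.order("soup"))  # a plate of soup
```

Ordering "pizza" raises a ValueError, just as a waiter can’t bring a dish that isn’t on the menu. The caller only ever deals with order(), so the kitchen could change completely without breaking anyone’s order.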

API Example - Google’s T5 Transformer

Google’s T5 (Text-to-Text Transfer Transformer) is a model we can use in exactly this way, through the API of Hugging Face’s transformers library, and it takes a unified approach to many natural language processing (NLP) tasks. T5 is built on the transformer architecture and distinguishes itself by treating every task as a text-to-text transformation: during training, it learns to map input text to target text regardless of whether the task is translation, summarization, question answering, or something else. Pre-trained on a large corpus of web text, T5 achieved state-of-the-art results on several NLP benchmarks when it was released, all within a single, flexible, and scalable framework.
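Because every task is text-to-text, the task is chosen simply by a prefix in the input string. The sketch below builds such inputs using prefixes the original T5 checkpoints were trained with ("summarize: ", "translate English to German: ", "translate English to French: ", and the "question: ... context: ..." format the T5 paper used for SQuAD). The helper names are hypothetical; the strings they return are what you would hand to the tokenizer.

```python
# Hypothetical helpers: T5 picks its task from a plain-text prefix on the input.
TASK_PREFIXES = {
    "summarize": "summarize: ",
    "translate_en_de": "translate English to German: ",
    "translate_en_fr": "translate English to French: ",
}


def t5_input(task: str, text: str) -> str:
    """Prepend the task prefix so the same model knows what to do with `text`."""
    return TASK_PREFIXES[task] + text


def t5_qa_input(question: str, context: str) -> str:
    """Question answering packs both pieces into one string (SQuAD-style format)."""
    return f"question: {question} context: {context}"


print(t5_input("summarize", "Social anxiety is a common condition..."))
print(t5_input("translate_en_de", "How are you?"))
print(t5_qa_input("What is T5?", "T5 is a text-to-text transformer."))
```

The model, tokenizer, and output format stay the same across tasks; only the prefix changes.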

Using Transformers to Transform the Healthcare Sector

Let’s backtrack. Welfie’s resource-rating process was slow, and I wanted to find a way to speed it up. The T5 model can help here: by summarizing each resource, it cuts down the time needed to digest it. Before we start building, let’s recap the use case.

Picture this:

Scenario: A healthcare professional needs to stay updated with the latest medical research findings but struggles to find time to read lengthy healthcare resources.

Solution: The healthcare worker utilizes the T5 model by inputting the resource or its link into the system. The tool generates a concise summary, highlighting key findings and insights within minutes.

Outcome: The healthcare worker saves valuable time by quickly grasping the essential information from the resource, enabling efficient decision-making and allowing for more resources to be rated in a short amount of time.
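The Solution step mentions inputting either the resource text or its link. If a link is given, the page’s HTML has to be turned into plain text before it reaches the model. Here is a minimal standard-library sketch of that step; html_to_text and fetch_resource_text are hypothetical helpers (not part of the original project), and real pages may need smarter cleanup (navigation bars, footers) than simply dropping script and style blocks.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style> tags."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not inside a script/style block.
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_text(html: str) -> str:
    """Hypothetical helper: strip tags and return the page's visible text."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def fetch_resource_text(url: str) -> str:
    """Hypothetical helper: download a resource page and return its plain text."""
    with urlopen(url, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return html_to_text(resp.read().decode(charset, errors="replace"))
```

The text returned by fetch_resource_text() can then go through the same summarization step as pasted text.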

Now that we understand the use case, let’s get building.

Writing the Code

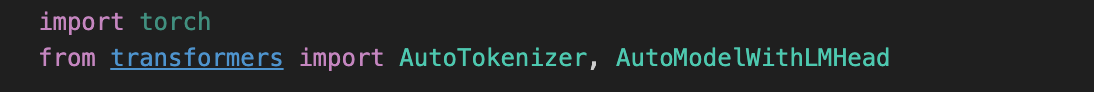

First, we need to import the tools we’ll use from the “transformers” library, including the “AutoTokenizer” class, which loads the tokenizer that matches a pre-trained model such as T5 base.

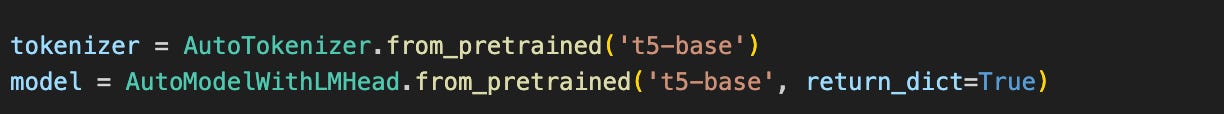

Next, we need to tell the transformers library which model to load. The AutoTokenizer class identifies and loads the correct tokenizer based on the provided model name or path, and the model weights themselves are loaded from the same name by a matching model class. Here, the model we want is t5-base, the base version of T5.

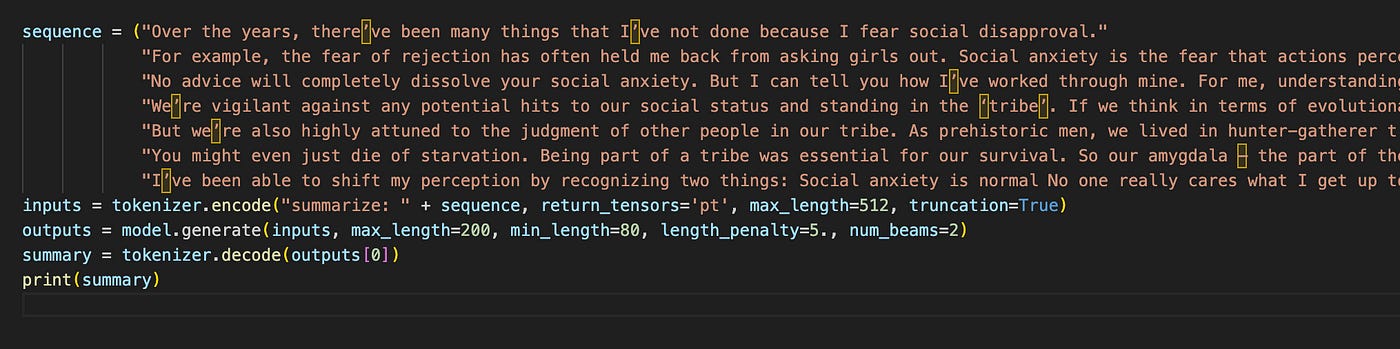

Finally, the fun part: inputting our text and parameters. First, we define a variable called sequence that holds the text we want to summarize; in this case, we copy and paste the text of a resource about social anxiety. Next, we encode the sequence with the tokenizer, putting the prefix "summarize: " in front of it, which tells the model to summarize the text. We then generate the output, setting parameters such as max_length and min_length (the bounds on the summary’s length in tokens), length_penalty (how strongly longer summaries are favored), and num_beams (how many candidate summaries beam search keeps at each step). Lastly, the generated token IDs are decoded back into text, and print(summary) prints our summarized text to the terminal for us to read.
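To see what length_penalty does, the sketch below reproduces the length normalization that Hugging Face’s beam search applies to each finished candidate: its total token log-probability divided by length ** length_penalty. The two candidate summaries and their log-probabilities are made-up numbers, not outputs from T5.

```python
def beam_score(sum_logprobs: float, length: int, length_penalty: float) -> float:
    """Length-normalized beam score: sum of log-probs / length ** length_penalty."""
    return sum_logprobs / (length ** length_penalty)


# Hypothetical candidates: (total log-probability, length in tokens).
short_hyp = (-5.0, 10)
long_hyp = (-8.0, 20)

for lp in (0.0, 1.0, 2.0):
    s = beam_score(*short_hyp, lp)
    l = beam_score(*long_hyp, lp)
    winner = "short" if s > l else "long"
    print(f"length_penalty={lp}: short={s:.3f} long={l:.3f} -> {winner}")
```

With length_penalty=0.0 the shorter candidate wins on raw log-probability (-5.0 vs -8.0); at 1.0 (-0.5 vs -0.4) and 2.0 (-0.05 vs -0.02) the longer one wins. Because log-probabilities are negative, dividing by a larger denominator pulls long candidates’ scores toward zero, which is why positive values favor longer summaries.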

To summarize, we loaded Google’s T5 model through the transformers library, specified which model (t5-base) to load, and summarized our block of text by setting parameters that shape the desired summary.
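Putting the steps together, here is a minimal end-to-end sketch, assuming the transformers library and PyTorch are installed. It is not the exact code from the screenshots: the AutoModelForSeq2SeqLM class, the 512-token truncation, early_stopping, and the parameter values (150, 40, 2.0, 4) are assumptions chosen for illustration. The transformers import sits inside summarize() so the prefix helper still works without the library installed.

```python
def build_summarization_input(text: str) -> str:
    """T5 selects its task from a text prefix; "summarize: " requests a summary."""
    return "summarize: " + text.strip()


def summarize(text: str, model_name: str = "t5-base", max_length: int = 150,
              min_length: int = 40, length_penalty: float = 2.0,
              num_beams: int = 4) -> str:
    # Imported lazily so build_summarization_input() works without transformers.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)

    # T5 was pre-trained on 512-token inputs, so longer resources are truncated.
    inputs = tokenizer(build_summarization_input(text), return_tensors="pt",
                       max_length=512, truncation=True)
    outputs = model.generate(
        **inputs,
        max_length=max_length,
        min_length=min_length,
        length_penalty=length_penalty,
        num_beams=num_beams,
        early_stopping=True,
    )
    # generate() returns token IDs; decode them back into readable text.
    return tokenizer.decode(outputs[0], skip_special_tokens=True)
```

Usage: with the resource text pasted into a variable called sequence, print(summarize(sequence)) downloads t5-base on the first run and prints its summary. Because of the truncation, only roughly the first 512 tokens of a long resource reach the model.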

The full code is available here in my GitHub repository.

Why Does This Work?

Welfie’s main problem was the time it takes to read and rate resources. This solution speeds up rating by giving the reader a quick overview of each resource through summarization. Instead of reading the whole resource (5+ minutes) to get a basic understanding before rating it, the reader can read the summary and go straight to rating, making the process up to 93% faster.
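For a rough sense of scale, the sketch below works through the numbers from the introduction. It assumes "93% faster" means 93% less time per resource and uses 300 as the resource count, so the totals are best-case estimates, not measurements.

```python
# Figures from the text; the 93% reduction is the best case ("up to").
RESOURCES = 300             # "over 300 resources", so this is a lower bound
MINUTES_PER_RESOURCE = 5    # "around 5 minutes per resource"
TIME_REDUCTION = 0.93       # assumption: "93% faster" read as 93% less time

baseline_min = RESOURCES * MINUTES_PER_RESOURCE
per_resource_s = MINUTES_PER_RESOURCE * 60 * (1 - TIME_REDUCTION)
with_summaries_min = baseline_min * (1 - TIME_REDUCTION)

print(f"Without summaries: {baseline_min} min (~{baseline_min / 60:.0f} h)")
print(f"With summaries: ~{per_resource_s:.0f} s per resource, "
      f"~{with_summaries_min:.0f} min in total")
```

Under these assumptions, about 25 hours of reading (1,500 minutes) shrinks to roughly 21 seconds per resource, or about 105 minutes in total.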

Future Directions

While the code for this project was relatively simple, my main focus was practicing the product process end to end, from problem analysis to solution building. In the future, I hope to keep finding applications of AI and building my own solutions to better understand the technical side of the product process. The fast-moving field of AI can seem daunting at first, but gradually applying advanced models to common workflow problems could be the next step in changing how we approach work.